Some advice for training a Transformer in a new language

Training a Transformer

Viktor Alm

I like to build stuff

Introduction

Continuing the pre-training of an existing model to do a "domain adaptation" can be a quick and easy way to gain a few extra percentage points of accuracy. But if you are going to do classification tasks where bias in a model's training data can have negative consequences, you must have total control over everything the model has seen, and then you have no alternative but to train from scratch. Maybe you have extra-long examples in your datasets, or a domain with very specific jargon. There may not even be any pre-trained models in the language you want to use. These are all valid reasons to get into pre-training models.

Model

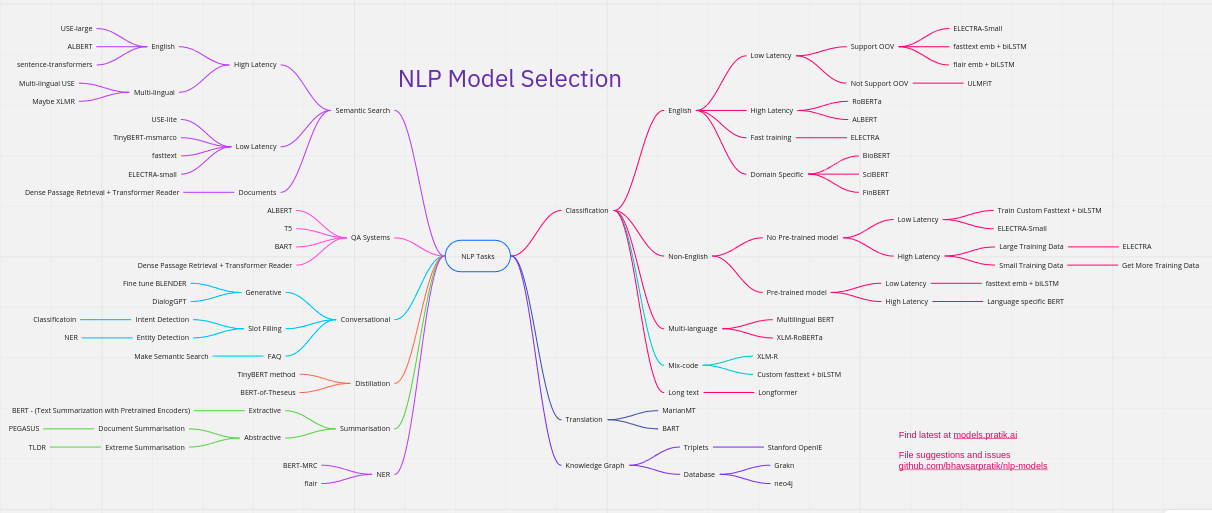

Which model should you choose? It depends on the problem you want to solve.

How much compute do you have? How much data? How much help can you get? How much time? How much can inference cost? To transform text: T5 or BART. To understand text: ELECTRA or RoBERTa. For fast inference: pruning, distillation or ALBERT. To write: GPT.

Data

It all starts with data. Ideally, you have a large amount of data from processes similar to the ones you intend to apply the model to. If you want a model for assessing school exams about the Roman Empire, it helps to have general data about antiquity and other history submissions before you move on to training the model specifically to grade texts about the Roman Empire. If you have found an exciting new architecture or just want to experiment, there are open datasets, so you do not need any organization's closed data.

Balancing datasets so that they follow the prevailing culture's perception of different words is extremely difficult. The model becomes a direct reflection of the data: a general reflection of how people use language, but not necessarily of how you want it, or think it should be, used. If you are looking to create a model with a deliberately designed language understanding, it becomes almost necessary to go into gray areas of data collection. Always consider how balanced the data is and how well it reflects reality and/or the goal you want to achieve. You also need insane amounts of it.

Tokenization

Tokenization works as a kind of compression, or division, of words. You can see demos of this on my page "Högskoleprovet". Word and context comprehension usually work well even with only a few letters per token, but since the model reads a fixed number of tokens, short tokens limit the total length of text it can read. It is therefore usually wiser to train your own vocab. I have tried training different English models whose vocabularies contained Å, Ä and Ö, but the length of text they could handle was halved. If you want to do a POC, it may be an idea to extend the existing vocabulary instead, to make use of the resources already put into training the model.
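To make the length argument concrete, here is a minimal sketch that measures characters per token with a greedy longest-match tokenizer (similar in spirit to WordPiece, but simplified). The two tiny vocabularies are hypothetical and made up for illustration; a real comparison would use your own trained vocab and an existing English one.

```python
def greedy_tokenize(word, vocab):
    """Split a word left to right into the longest pieces found in vocab.

    Simplification: when no vocab piece matches, the single character is
    emitted as its own token (real WordPiece would emit [UNK] instead).
    """
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate until it is in the vocab or a single character.
        while end > start + 1 and word[start:end] not in vocab:
            end -= 1
        pieces.append(word[start:end])
        start = end
    return pieces


def chars_per_token(text, vocab):
    """Average number of characters (spaces excluded) covered by one token."""
    words = text.split()
    n_tokens = sum(len(greedy_tokenize(w, vocab)) for w in words)
    return sum(len(w) for w in words) / n_tokens


# Hypothetical toy vocabularies, made up for illustration.
swedish_vocab = {"hög", "skole", "provet", "är", "svårt"}
english_vocab = {"sk", "ole", "pro", "vet", "sv", "rt"}

text = "högskoleprovet är svårt"
print(greedy_tokenize("högskoleprovet", english_vocab))
# ['h', 'ö', 'g', 'sk', 'ole', 'pro', 'vet']
print(chars_per_token(text, swedish_vocab))  # 4.2
print(chars_per_token(text, english_vocab))  # 1.75
```

With a fixed input length of, say, 512 tokens, the English-style vocab in this toy case covers less than half as much Swedish text as the Swedish one.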

There are several methods, and none of them truly feels optimal: BPE-based ones, and SentencePiece with the unigram model. Unfortunately, Swedish has a lot of compound words, which these algorithms are not designed for. Right now I think we will end up with some character-based layers that then move up towards words and possibly sentences/paragraphs.
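For readers new to BPE, a minimal sketch of how it learns its merges: count adjacent symbol pairs, weighted by word frequency, and repeatedly merge the most frequent pair. This is the textbook algorithm in simplified form, not the implementation of any particular library, and the corpus is made up.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of pair in symbols with the joined symbol."""
    merged = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe(words, num_merges):
    """Learn BPE merges from a list of words (textbook algorithm, simplified)."""
    # Every word starts as a sequence of characters, weighted by its frequency.
    vocab = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the pair seen first
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges, vocab


# A made-up corpus: "hus" (house), "båt" (boat) and the compound "båthus".
merges, vocab = learn_bpe(["hus", "hus", "hus", "båt", "båt", "båthus"], num_merges=4)
print(merges)  # [('h', 'u'), ('hu', 's'), ('b', 'å'), ('bå', 't')]
print(vocab)   # Counter({('hus',): 3, ('båt',): 2, ('båt', 'hus'): 1})
```

Merges are driven purely by pair frequency. In this toy corpus the compound happens to split at its boundary because both parts are frequent on their own, but nothing in the algorithm knows where one word in a compound ends and the next begins.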

Encode / Decode data

When you tokenize a dataset with your vocab, it is extremely important to check the result. Many tokenizers are specialized for just one language; the BERT/ALBERT/ELECTRA tokenizers are examples.

If you choose lowercasing, it always strips accents as well:

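```python
# BERT's BasicTokenizer.tokenize: lowercasing also strips accents
if self.do_lower_case:
    token = token.lower()
    token = self._run_strip_accents(token)

# First line of _run_strip_accents: NFD splits "ö" into "o" plus a combining
# diaeresis, and the combining marks are then dropped
text = unicodedata.normalize("NFD", text)
```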

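Or these lines from ALBERT's code:

```python
# NFKD decomposes "å", "ä" and "ö" into a base letter plus a combining mark
outputs = unicodedata.normalize("NFKD", outputs)

# A hardcoded English alphabet: every other letter, å, ä and ö included, falls outside it
english_chars = set(list("abcdefghijklmnopqrstuvwxyz"))
```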
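A quick way to see what this does to Swedish is to reproduce the stripping step with Python's standard `unicodedata` module. This is a re-implementation for testing, written to mirror the NFD-then-drop-combining-marks logic, not the libraries' own code; the word list is an illustrative assumption.

```python
import unicodedata


def strip_accents(text):
    """Decompose with NFD, then drop combining marks (Unicode category Mn)."""
    decomposed = unicodedata.normalize("NFD", text)
    return "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")


def accent_collisions(words):
    """Group distinct words that become identical after lowercasing and stripping."""
    groups = {}
    for word in words:
        groups.setdefault(strip_accents(word.lower()), set()).add(word)
    return {key: sorted(group) for key, group in groups.items() if len(group) > 1}


print(strip_accents("Högskoleprovet"))  # Hogskoleprovet
# "får" (sheep) and "far" (father) end up as the same token.
print(accent_collisions(["får", "far", "år", "hus"]))  # {'far': ['far', 'får']}
```

Running a collision check like this over your own vocabulary or corpus shows how many distinct Swedish words an uncased, accent-stripping pipeline would merge.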

The key is to test, and test again, before you start training for real. Training a model like this can be costly, and you do NOT want to get it wrong. Tokenize to ids and back. Convert your TFRecords back to text and check whether anything changed. Inspect the masks. Definitely take a few days to get acquainted with how this works before you start, because hardcoded stuff like this usually lurks in more than one file.
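The "tokenize to ids and back" check can be sketched as a small helper that takes any encode/decode pair. The toy character tokenizer below is a hypothetical stand-in for your real one; the `expected` argument is an assumption of this sketch, there so that normalization you actually want (such as lowercasing) is not reported as an error.

```python
import unicodedata


def round_trip_failures(texts, encode, decode, expected=lambda text: text):
    """Return (expected, decoded) pairs for texts that do not survive encode -> decode.

    `expected` states the normalization you actually want, e.g. str.lower
    for an uncased model, so only unintended changes are reported.
    """
    failures = []
    for text in texts:
        decoded = decode(encode(text))
        if decoded != expected(text):
            failures.append((expected(text), decoded))
    return failures


# Hypothetical stand-in for your real tokenizer: character ids, lowercased
# and with accents stripped, like the uncased BERT behavior described above.
def toy_encode(text):
    decomposed = unicodedata.normalize("NFD", text.lower())
    return [ord(ch) for ch in decomposed if unicodedata.category(ch) != "Mn"]


def toy_decode(ids):
    return "".join(chr(i) for i in ids)


samples = ["hus och båt", "Högskoleprovet", "plain ascii"]
for want, got in round_trip_failures(samples, toy_encode, toy_decode, expected=str.lower):
    print(f"expected {want!r}, got {got!r}")
# expected 'hus och båt', got 'hus och bat'
# expected 'högskoleprovet', got 'hogskoleprovet'
```

The same helper works for the TFRecord check: make `encode` write and read back a record, and `decode` turn the ids back into text.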

Pretraining

For decoders (auto-regressive models), the training task is quite simple: guess the next token. For encoders and encoder/decoder models, it is not as simple.
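To make "guess the next token" concrete, a minimal sketch of how decoder training pairs are typically built: the labels are simply the inputs shifted one position to the left. Non-overlapping windows and the toy ids are assumptions of this example; many frameworks do the shift inside the loss function instead.

```python
def next_token_examples(token_ids, context_length):
    """Cut a token stream into non-overlapping (inputs, labels) windows.

    The labels are the inputs shifted one position left, so position i is
    trained to predict token i + 1.
    """
    examples = []
    # Each window needs context_length + 1 tokens: the inputs plus one extra label.
    for start in range(0, len(token_ids) - context_length, context_length):
        window = token_ids[start:start + context_length + 1]
        examples.append((window[:-1], window[1:]))
    return examples


for inputs, labels in next_token_examples(list(range(10, 19)), context_length=4):
    print(inputs, "->", labels)
# [10, 11, 12, 13] -> [11, 12, 13, 14]
# [14, 15, 16, 17] -> [15, 16, 17, 18]
```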

Compute/FLOPS

Google Cloud Platform offers both TPUs and GPUs, and most of the available models are directly compatible with TPUs, ready to go!

Encoder

Masked Language Modeling (MLM): BERT masks tokens in its training samples. Pre-generating these samples from over 100GB of data, with maybe 40 different masks per sample, produces insane amounts of data: multiple TBs. I limited myself to 25 masked copies per sample over a few runs. Renting servers to generate all that data was expensive, several tens of thousands of kronor, but it saved me some time. Pre-generated masks reduce randomness in training and make each run see approximately the same tasks. However, this does not matter much if you only want to train a couple of models, so you can just as well generate the masks on the fly.

Then there are different strategies for how to mask. Do you mask tokens, whole words or spans? Which tokens do you mask? This is something you have to look into. Then there are variants such as XLNet and ELECTRA.
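For reference, the standard BERT recipe selects 15% of the tokens; of those, 80% are replaced by [MASK], 10% by a random token and 10% are left unchanged. A minimal sketch of token-level masking, usable both for pre-generating copies (BERT's `create_pretraining_data.py` calls the number of copies `dupe_factor`) and for masking on the fly. The token ids and vocab size are hypothetical; whole-word or span masking would select groups of positions instead of single ones.

```python
import random


def mask_tokens(token_ids, vocab_size, mask_id, special_ids, mask_prob=0.15, rng=random):
    """BERT-style masking: pick ~15% of positions; of those, 80% -> [MASK],
    10% -> a random token, 10% -> left unchanged.

    Returns (inputs, labels); labels are -100 (PyTorch's default ignore_index)
    everywhere except the positions the model must predict.
    """
    inputs = list(token_ids)
    labels = [-100] * len(token_ids)
    candidates = [i for i, tok in enumerate(token_ids) if tok not in special_ids]
    num_to_mask = min(len(candidates), max(1, round(len(candidates) * mask_prob)))
    for i in rng.sample(candidates, num_to_mask):
        labels[i] = token_ids[i]
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = mask_id
        elif roll < 0.9:
            inputs[i] = rng.randrange(vocab_size)
        # else: keep the original token, but still predict it
    return inputs, labels


# Hypothetical ids: 101/102 as [CLS]/[SEP], 103 as [MASK], 30000-token vocab.
sample = [101] + list(range(1000, 1098)) + [102]
rng = random.Random(0)

# Static: several masked copies per sample, generated once and stored.
static_copies = [mask_tokens(sample, 30000, 103, {101, 102}, rng=rng) for _ in range(25)]

# Dynamic: call mask_tokens inside the data loader instead, so every epoch
# sees a fresh mask at no storage cost.
inputs, labels = mask_tokens(sample, 30000, 103, {101, 102}, rng=rng)
print(sum(label != -100 for label in labels))  # 15
```

The storage trade-off from the text shows up directly: the static variant multiplies the dataset by the number of copies, while the dynamic one costs a little CPU per batch.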

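ELECTRA uses a small MLM model, called the generator, to replace some of the tokens, and a second model, the discriminator, that guesses which tokens have been replaced. This is a VERY efficient way to train models, sometimes reaching comparable results with as little as 1/4 of the compute.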
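The discriminator's training targets can be sketched in a few lines. The generator is replaced by a hypothetical stub here; in ELECTRA it is a small MLM sampling tokens at the masked positions. When the generator happens to sample the original token, that position counts as original rather than replaced, which is why the labels compare against the original ids instead of being taken from the mask positions.

```python
def electra_example(original_ids, masked_positions, generator_sample):
    """Build the discriminator's input and its replaced-token labels.

    generator_sample(pos) stands in for the small MLM generator sampling a
    token at a masked position (a hypothetical hook for this sketch).
    """
    corrupted = list(original_ids)
    for pos in masked_positions:
        corrupted[pos] = generator_sample(pos)
    # Compare with the originals: a correct generator guess counts as original.
    labels = [int(orig != corr) for orig, corr in zip(original_ids, corrupted)]
    return corrupted, labels


# Toy ids; the stub generator guesses position 1 correctly and position 3 wrong.
original = [5, 6, 7, 8, 9]
guesses = {1: 6, 3: 42}
corrupted, labels = electra_example(original, [1, 3], guesses.get)
print(corrupted)  # [5, 6, 7, 42, 9]
print(labels)     # [0, 0, 0, 1, 0]
```

Because the discriminator gets a label at every position, not only at the ~15% that were masked, it learns from every token in the batch, which is a large part of why the method is so compute-efficient.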

Encoder/Decoder

I have not had time to experiment enough here yet. I have only trained one model from scratch, English to Swedish, but I have a few more experiments underway. There is a real mix of training methods here: T5, BART, MARGE, back-translation, denoising, etc. I will add to this section once I have more practical experience pre-training these models.
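As one concrete example of the denoising objectives mentioned here, a minimal sketch of T5-style span corruption: each dropped span becomes a single sentinel in the input, and the target lists the sentinels followed by the tokens they replaced. Spans are given explicitly instead of sampled (T5 samples them randomly), words stand in for subword tokens, and the sentinel names follow the `<extra_id_N>` convention of the released T5 vocabulary.

```python
def span_corrupt(tokens, spans):
    """T5-style span corruption with explicitly given spans.

    spans: sorted, non-overlapping (start, end) pairs, end exclusive.
    Each dropped span becomes one sentinel in the input; the target lists
    each sentinel followed by the tokens it replaced, plus a final sentinel.
    """
    inputs, targets = [], []
    cursor = 0
    for n, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{n}>"
        inputs += tokens[cursor:start] + [sentinel]
        targets += [sentinel] + tokens[start:end]
        cursor = end
    inputs += tokens[cursor:]
    targets.append(f"<extra_id_{len(spans)}>")
    return inputs, targets


words = "Thank you for inviting me to your party last week .".split()
inputs, targets = span_corrupt(words, [(2, 4), (8, 9)])
print(" ".join(inputs))   # Thank you <extra_id_0> me to your party <extra_id_1> week .
print(" ".join(targets))  # <extra_id_0> for inviting <extra_id_1> last <extra_id_2>
```

BART-style denoising differs mainly in the target: the decoder reconstructs the full original text rather than only the dropped spans.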

Training Parameters

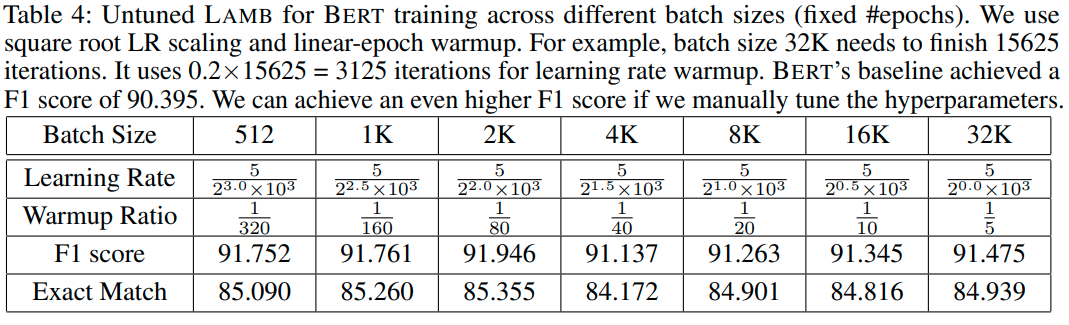

This comes down to reading the optimizer papers and checking their appendices; the settings are often specified somewhere. I see a lot of GitHub issues asking how to do it, or people who copy-paste the first example they find, one that does not match their case at all, and then train for several weeks. People with PhDs... It is difficult to find good examples when things are new, and doing the most correct thing at the moment is largely a matter of testing and reading more papers. It's not as easy as following the docs for some framework. Most of it is undocumented, and sometimes you are lucky to find a short code comment or an appendix. Even when there are clear instructions, people make strange mistakes. The LAMB paper (You et al.) is a great example: it gives clear instructions, but people still miss them.
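One setting that typically hides in those appendices is the learning-rate schedule. A minimal sketch of a common pattern, linear warmup followed by linear decay to zero, written without any framework; BERT's original code uses a close variant (warmup plus polynomial decay with power 1). The step counts and peak rate below are placeholders, not recommendations: take the real values from the paper for the optimizer and batch size you actually use.

```python
def learning_rate(step, peak_lr, warmup_steps, total_steps):
    """Linear warmup from 0 to peak_lr, then linear decay to 0 at total_steps.

    Assumes total_steps > warmup_steps.
    """
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = (total_steps - step) / (total_steps - warmup_steps)
    return peak_lr * max(0.0, remaining)


# Placeholder numbers: take the real ones from the paper's appendix.
for step in (0, 5_000, 10_000, 55_000, 100_000):
    print(step, learning_rate(step, peak_lr=1e-3, warmup_steps=10_000, total_steps=100_000))
```

Plotting this function for your planned run before launching it is a cheap way to catch a schedule that decays far too early or never warms up.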

End use

You will soon discover shortcomings in the decisions you made to get to this stage; they show up when you test on different fine-tuning tasks. Down the rabbit hole we go!

Most often I look for someone who has solved a similar task and check how they fine-tuned their model, or I look up the settings for the most similar GLUE task and work from there.
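When nothing similar is at hand, the BERT paper's appendix gives a small grid that works as a starting point for GLUE-style tasks: learning rates 5e-5, 3e-5 and 2e-5, batch sizes 16 and 32, and 2 to 4 epochs. A minimal sketch of running such a grid; `evaluate` is a hypothetical hook into your own fine-tuning code, not a library function.

```python
import itertools

# Fine-tuning ranges suggested in the BERT paper's appendix.
GRID = {
    "learning_rate": [5e-5, 3e-5, 2e-5],
    "batch_size": [16, 32],
    "epochs": [2, 3, 4],
}


def all_configs(grid):
    """Yield every combination of the grid as a dict."""
    keys = list(grid)
    for values in itertools.product(*(grid[key] for key in keys)):
        yield dict(zip(keys, values))


def best_config(grid, evaluate):
    """Return the config with the highest dev-set score.

    evaluate(config) -> float is a hypothetical hook: fine-tune with the
    config and score it on your dev set.
    """
    return max(all_configs(grid), key=evaluate)


print(len(list(all_configs(GRID))))  # 18
```

Eighteen runs is affordable for most fine-tuning tasks; with small datasets it can also be worth repeating each configuration with a few random seeds.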

AI gets a better score than 80% of Swedes on our advanced test for language understanding

What does this new language understanding mean for us?


Year 2020

Technology is advancing by leaps and bounds. Many organizations are still actively working on digitization. At the same time, there is a small world that takes digital processes to the next level through AI and automation.

NOW you can evaluate an AI on the same language tests as humans, and it gets a better result than most of them. How will this affect how we work with language? I think almost every text-based process will be affected by these advances.

Cooperation

Although I believe some classification models can run without direct monitoring, with only regular follow-ups, I strongly believe in using "AI" to maximize individuals' capacity. Like industrialization, this revolution will increase productivity. If you can triple or quadruple the throughput of the workers in these processes, you have gained a lot, and in many cases that is already possible with what we have today. In the short term, it is more about collaboration than about removing people from processes: producing the right data and building understanding for the upcoming handover.

The tragic truth is that many of the organizations with language processes that could be enhanced have no easy way to take advantage of this progress. Their infrastructure is extremely inflexible, and the data saved from their processes is rarely the right data.

Teacher

What does this mean for a teacher who scores texts daily? AI likely already gets better results on reading comprehension than many teachers in this country. Is it fair to students to be judged by people who have difficulties with reading comprehension? And what if you also factor in concentration and carelessness?

Is it fair to force these teachers to read and score? That time and energy could be spent on the students: making them feel noticed, more coaching. Why not let students interact with a model that provides direct feedback? It would teach them how to work with deep-learning models at an early age and foster critical thinking about the results, without anyone taking it personally or avoiding admitting mistakes.

Take history as an example: how many teachers have sat and evaluated texts about the fall of the Roman Empire or the Civil War according to set criteria? The data processes are already there, and on a national scale this could be very efficient, even more so now that models make it possible to switch between languages almost seamlessly.

Customer Support

Telephone support lines are often just a human interface to knowledge databases and special permissions that the customer does not have. How many support workers would place in the top 70-85% for reading comprehension? Even where such workers exist, they can rarely make use of that skill, since there are hardly any tools for them to work with and search for information. Getting good at it takes time and experience, in a profession that is often seen as temporary.

There is a lot of efficiency to be gained by serving support articles to customers through models that act as interfaces, with some smart engineering to make it feel like someone is helping them. Collect the information, and provide a case-management interface where requests can be processed further automatically wherever customers lack the permissions themselves.

Administration

Incoming emails and forms often consist of simple "actions" that must be performed or delegated to the right person, or they require some form of assessment according to set criteria. With the right data and models, automating this is almost trivial.

Writing

Transformers are scary good at predicting the next token, which makes them fantastic writing tools. If you combine language comprehension and text generation, you get different kinds of translators: between languages, between writing styles, or from long texts to summaries.

Journalist

A model tailored to you and how you want to write helps you by predicting the next word. It is trained on your favorite authors and on your own texts to better understand how you want to write: autocorrect on steroids. I will show some demos of this soon. For a writer or journalist, this means direct feedback and a sounding board in the style you want, while you focus on the message and the research.

Communications Officer

In an organization, there may be different nodes that convey information: a model selects the relevant pieces and boils them down to the essentials. Texts could also be generated from tables or other data that are easier to interpret in a short format.

Copywriter

How do you reach an individual customer in the best way? Through personalized messages, of course. With the right data, this is very feasible.

Advice

Time and time again I see people who want to do "AI", but the new breakthrough models are rarely what gets implemented. There is a great shortage of skills in Sweden. "AI" is a hot topic that unfortunately attracts a lot of bullshit. It takes a lot of resources, and real progress or unique models are very rarely made on a laptop. Consulting companies are looking to sell hours, not to solve problems. The pace of the various advances means that everyone is behind, and keeping up is extremely time-consuming even under the right conditions.